Large Language Models (LLMs) have captivated the world with their ability to generate human-quality text, translate languages, summarize content, and answer complex questions. Prominent examples include OpenAI’s GPT-3.5, Google’s Gemini, Meta’s Llama2, etc.

As LLMs become more powerful and sophisticated, measuring the performance of LLM-based applications becomes more important. Evaluating LLMs is essential to ensuring their performance, reliability, and fairness in various NLP applications. In this article, we will explore the needs, challenges, and methods associated with evaluating large language models.

This article was published as a part of the Data Science Blogathon.

As language models continue to advance in capability and size, researchers, developers, and business users face issues such as model hallucination, toxic or violent responses, prompt injection, and jailbreaking.

The two-dimensional landscape below plots the frequency and severity of these issues. Hallucination is observed frequently, but it is less severe than jailbreaking and prompt injection. Toxic and violent responses fall in the middle on both frequency and severity.

Let’s discuss such issues in detail.

Hallucination is a phenomenon in which a Large Language Model (LLM) generates text that is not factual, not coherent, or inconsistent with the input context. While LLMs excel at generating text that appears coherent and contextually relevant, they can also produce outputs that go beyond the scope of the input context, resulting in hallucinated or misleading information. For example, in summarization tasks, LLMs may generate summaries that contain information that is not present in the original text.

The generation of toxic, hate-filled, and violent responses from LLMs is a concerning behavior that highlights the ethical challenges of deploying them. Such responses can be harmful and offensive to individuals and groups. We should evaluate an LLM to assess the quality and appropriateness of its output.

Prompt injection is a technique that involves bypassing filters or manipulating the LLM by using carefully crafted prompts that cause the model to ignore previous instructions or perform unintended actions. This can result in unintended consequences, such as data leakage, unauthorized access, or other security breaches.

Jailbreaking

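Jailbreaking typically refers to prompting the model into generating content or responses that circumvent its restrictions, break its rules, or support unauthorized activities. A common method of jailbreaking is pretending, where the prompt asks the model to play a role or imagine a scenario in which its usual restrictions supposedly do not apply.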

Robust LLM evaluation is essential to mitigate such issues and ensure coherent and contextually aligned responses. High-quality LLM evaluation helps in:

Numerous approaches exist for evaluating LLMs. Let’s explore some typical methods for assessing LLM outputs.

The most straightforward and reliable way to evaluate the performance of an LLM is through human evaluation. Human evaluators can assess various aspects of the model’s performance, such as its factual accuracy, coherence, relevance, etc. This can be done through pairwise comparisons, ranking, or assessing the relevance and coherence of the generated text.

Here are some tools that can aid in human evaluation: Argilla (an open-source platform), Label Studio, and Prodigy.
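To make the pairwise comparison idea concrete, here is a minimal sketch of how human preference votes could be aggregated into per-model win rates. The function name `win_rates`, the vote format, and the model names are assumptions made for illustration; they are not part of any of the tools listed above.

```python
from collections import Counter

def win_rates(votes):
    """Aggregate pairwise human votes into a win rate per model.

    `votes` is an iterable of (model_a, model_b, winner) tuples, where
    `winner` is one of the two model names or the string "tie".
    A tie counts as half a win for each side (an illustrative choice).
    """
    wins = Counter()
    games = Counter()
    for model_a, model_b, winner in votes:
        games[model_a] += 1
        games[model_b] += 1
        if winner == "tie":
            wins[model_a] += 0.5
            wins[model_b] += 0.5
        else:
            wins[winner] += 1
    return {model: wins[model] / games[model] for model in games}

# Hypothetical votes from four annotators comparing two models.
votes = [
    ("model_x", "model_y", "model_x"),
    ("model_x", "model_y", "model_y"),
    ("model_x", "model_y", "model_x"),
    ("model_x", "model_y", "tie"),
]
rates = win_rates(votes)
print(rates)
```

Win rates are only one way to summarize pairwise judgments; with many models or uneven numbers of comparisons, a rating scheme may be a better fit.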

End-user feedback (user ratings, surveys, platform usage, etc.) provides insights into how well the model performs in real-world scenarios and meets user expectations.

LLM-based evaluation leverages an LLM itself to assess the quality of the outputs. It is a dynamic approach that allows us to create autonomous and adaptive evaluations without the need for external evaluation or human intervention.

Frameworks for LLM-based evaluation: LangChain, RAGAS, LangSmith
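The general pattern behind LLM-based evaluation can be sketched in a few lines: build a grading prompt, send it to a judge model, and parse a verdict. The sketch below is framework-agnostic and illustrative only. The prompt wording, the `judge` function, and the `fake_llm` stand-in are assumptions, not the prompts or APIs used by LangChain, RAGAS, or LangSmith.

```python
from typing import Callable

# Hypothetical grading prompt; real frameworks use their own templates.
JUDGE_TEMPLATE = (
    "You are grading a model response.\n"
    "Criterion: {criterion}\n"
    "Question: {question}\n"
    "Response: {response}\n"
    "Explain your reasoning, then end with a final line containing only Y or N."
)

def judge(question: str, response: str, criterion: str,
          call_llm: Callable[[str], str]) -> dict:
    """Ask a judge model whether `response` meets `criterion`."""
    prompt = JUDGE_TEMPLATE.format(
        criterion=criterion, question=question, response=response
    )
    raw = call_llm(prompt).strip()
    lines = raw.splitlines()
    # The last line is expected to hold the verdict.
    verdict = lines[-1].strip().upper() if lines else ""
    if verdict not in {"Y", "N"}:
        # The judge did not follow the format; report no score.
        return {"reasoning": raw, "value": None, "score": None}
    reasoning = "\n".join(lines[:-1]).strip()
    return {"reasoning": reasoning, "value": verdict,
            "score": 1 if verdict == "Y" else 0}

def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call so the sketch runs offline.
    return "The response answers the question directly.\nY"

result = judge("What is 2 + 2?", "4", "Is the response concise?", fake_llm)
print(result)
```

In practice `call_llm` would wrap a real model client, and judge outputs that do not follow the requested format need explicit handling, as the `None` branch shows.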

Benchmarks provide standardized datasets and tasks that enable researchers and practitioners to compare the performance of different LLMs. Benchmark datasets (such as GLUE, SuperGLUE, SQuAD, etc.) cover a range of language tasks, such as question answering, text completion, summarization, translation, and sentiment analysis.

Quantitative metrics such as BLEU score, ROUGE score, Perplexity, etc. measure surface-level similarities between generated text and reference data on specific tasks. However, they may not capture the broader language understanding capabilities of LLMs.
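To show what "surface-level similarity" means, here is a simplified sketch of unigram overlap (the idea underlying ROUGE-1 and BLEU's unigram precision) and of perplexity computed from per-token log-probabilities. It is a teaching example under stated assumptions: whitespace tokenization, no stemming, no brevity penalty, and natural-log probabilities. It is not a replacement for a reference implementation of these metrics.

```python
import math
from collections import Counter

def unigram_overlap(prediction: str, reference: str) -> dict:
    """Clipped unigram overlap between a prediction and a reference."""
    pred = Counter(prediction.lower().split())
    ref = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count of each shared token.
    overlap = sum((pred & ref).values())
    precision = overlap / max(sum(pred.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    return {"precision": precision, "recall": recall}

def perplexity(token_log_probs) -> float:
    """Perplexity = exp of the negative mean log-probability per token."""
    return math.exp(-sum(token_log_probs) / len(token_log_probs))

scores = unigram_overlap("the cat sat on the mat", "the cat is on the mat")
print(scores)

# A model that assigns probability 0.25 to every token has perplexity 4.
ppl = perplexity([math.log(0.25)] * 4)
print(ppl)
```

The two sentences share five of six tokens even though one word differs in meaning, which is the kind of gap between overlap and understanding that these metrics cannot see.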

LangChain is an open-source framework for building LLM-powered applications. LangChain offers various types of evaluators to measure performance and integrity on diverse data.

Each evaluator type in LangChain comes with ready-to-use implementations and an extensible API that allows for customization according to unique requirements. These evaluators can be applied to different chain and LLM implementations in the LangChain library.

Evaluator types in LangChain:

Let’s discuss each evaluator type in detail.

A string evaluator is a component within LangChain designed to assess the performance of a language model by comparing its generated outputs (predictions) to a reference string (ground truth) or an input (such as a question or a prompt). These evaluators can be customized to tailor the evaluation process to fit your application’s specific requirements.

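String Evaluator implementations in LangChain: criteria evaluation, embedding distance, exact match, JSON evaluators, regex match, scoring evaluator, and string distance.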

The criteria evaluator lets you verify whether the output from an LLM or a chain adheres to a specified set of criteria. LangChain offers various criteria such as correctness, relevance, harmfulness, etc. Below is the complete list of criteria available in LangChain.

from langchain.evaluation import Criteria

print(list(Criteria))

Let’s explore a few examples by employing these predefined criteria.

a. Conciseness

We instantiate the criteria evaluator by invoking the load_evaluator() function and passing criteria as conciseness to check whether the output is concise or not.

from langchain.evaluation import load_evaluator

evaluator = load_evaluator("criteria", criteria="conciseness")

eval_result = evaluator.evaluate_strings(
    prediction="""Joe Biden is an American politician
who is the 46th and current president of the United States.
Born in Scranton, Pennsylvania on November 20, 1942,
Biden moved with his family to Delaware in 1953.
He graduated from the University of Delaware
before earning his law degree from Syracuse University.
He was elected to the New Castle County Council in 1970
and to the U.S. Senate in 1972.""",
    input="Who is the president of United States?",
)

print(eval_result)

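All string evaluators provide an evaluate_strings() method that takes the input (the prompt or question given to the model) and the prediction (the model's response); labeled evaluators additionally take a reference.

The criteria evaluators return a dictionary with the following attributes: score (a binary integer, where 1 means the output complies with the criteria and 0 means it does not), value (a "Y" or "N" corresponding to the score), and reasoning (the chain-of-thought reasoning the LLM generated before producing the score).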

b. Correctness

The correctness criterion requires reference labels. Here we initialize the labeled_criteria evaluator and additionally specify the reference string.

evaluator = load_evaluator("labeled_criteria", criteria="correctness")

eval_result = evaluator.evaluate_strings(
    input="Is there any river on the moon?",
    prediction="There is no evidence of river on the Moon",
    reference="""In a hypothetical future, lunar scientists discovered
an astonishing phenomenon—a subterranean river
beneath the Moon's surface""",
)

print(eval_result)

c. Custom Criteria

We can also define our own custom criteria by passing a dictionary in the form {"criterion_name": "criterion_description"}.

from langchain.evaluation import EvaluatorType, load_evaluator

# Each key is a criterion name; each value describes what the judge should check.
custom_criteria = {
    "numeric": "Does the output contain numeric information?",
    "mathematical": "Does the output contain mathematical information?",
}

prompt = "Tell me a joke"

output = """
Why did the mathematician break up with his girlfriend?
Because she had too many "irrational" issues!
"""

eval_chain = load_evaluator(
    EvaluatorType.CRITERIA,
    criteria=custom_criteria,
)

eval_result = eval_chain.evaluate_strings(prediction=output, input=prompt)

print("===================== Multi-criteria evaluation =====================")
print(eval_result)

Embedding distance measures the semantic similarity (or dissimilarity) between a prediction and a reference label string. It returns a distance score computed on their embedded representations; a lower value indicates that the prediction is more similar to the reference.

evaluator = load_evaluator("embedding_distance")

evaluator.evaluate_strings(
    prediction="Total Profit is 04.25 Cr",
    reference="Total return is 4.25 Cr",
)

By default, the Embedding distance evaluator uses cosine distance. Below is the complete list of distances supported.

from langchain.evaluation import EmbeddingDistance

list(EmbeddingDistance)
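For intuition about the default metric, cosine distance is one minus the cosine of the angle between two embedding vectors. The sketch below computes it on small hand-written vectors; these toy vectors are assumptions standing in for real embeddings, which an embedding model would normally produce.

```python
import math

def cosine_distance(a, b) -> float:
    """Return 1 - cosine similarity for two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)

# Toy vectors standing in for embeddings of three short texts.
same_direction = cosine_distance([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
orthogonal = cosine_distance([1.0, 0.0], [0.0, 1.0])

print(same_direction)  # close to 0: vectors point the same way
print(orthogonal)      # 1.0: vectors share no direction
```

Because cosine distance ignores vector length, two texts whose embeddings differ only in magnitude are treated as identical.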

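Exact match is probably the simplest way to evaluate an LLM output: the prediction either equals the reference string or it does not. We can also relax the exactness when comparing strings, for example by ignoring case.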

from langchain.evaluation import ExactMatchStringEvaluator

# Strict comparison: the strings must match exactly.
exact_match_evaluator = ExactMatchStringEvaluator()

# Relaxed comparison: ignore differences in letter case.
exact_match_evaluator = ExactMatchStringEvaluator(ignore_case=True)

exact_match_evaluator.evaluate_strings(
    prediction="Data Science",
    reference="Data science",
)
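The effect of relaxing exactness can be illustrated with a small standalone function. This is a sketch of the idea, not LangChain's implementation; the `exact_match` function and its return shape are assumptions chosen to mirror the example above.

```python
import string

def exact_match(prediction: str, reference: str,
                ignore_case: bool = False,
                ignore_punctuation: bool = False) -> dict:
    """Return {"score": 1} if the normalized strings are equal, else 0."""
    def normalize(text: str) -> str:
        if ignore_case:
            text = text.lower()
        if ignore_punctuation:
            # Remove ASCII punctuation characters only.
            text = text.translate(str.maketrans("", "", string.punctuation))
        return text

    return {"score": int(normalize(prediction) == normalize(reference))}

strict = exact_match("Data Science", "Data science")
relaxed = exact_match("Data Science", "Data science", ignore_case=True)
print(strict, relaxed)
```

Each relaxation trades precision for tolerance, so it is worth enabling only the ones that match how the output will actually be consumed.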

JSON evaluators help in checking the LLM's string output when it is expected to be in JSON format. The following JSON evaluators provide the functionality to check the consistency of the model's output.

a. JsonValidityEvaluator

from langchain.evaluation import JsonValidityEvaluator

evaluator = JsonValidityEvaluator()

# Well-formed JSON
prediction = '{"name": "Hari", "Exp": 5, "Location": "Pune"}'
result = evaluator.evaluate_strings(prediction=prediction)
print(result)

# Malformed JSON: trailing comma before the closing brace
prediction = '{"name": "Hari", "Exp": 5, "Location": "Pune",}'
result = evaluator.evaluate_strings(prediction=prediction)
print(result)
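The same validity check can be reproduced with Python's standard `json` module, which is a handy way to see why the second string fails. The function name `is_valid_json` and its return shape are assumptions made for this sketch.

```python
import json

def is_valid_json(text: str) -> dict:
    """Score 1 if `text` parses as JSON, else 0 with the parser's message."""
    try:
        json.loads(text)
        return {"score": 1}
    except json.JSONDecodeError as err:
        return {"score": 0, "reasoning": str(err)}

valid = is_valid_json('{"name": "Hari", "Exp": 5, "Location": "Pune"}')
invalid = is_valid_json('{"name": "Hari", "Exp": 5, "Location": "Pune",}')
print(valid)
print(invalid)
```

Strict JSON does not allow a trailing comma, so the second string is rejected even though it would be accepted as a Python dict literal.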

b. JsonEqualityEvaluator

from langchain.evaluation import JsonEqualityEvaluator

evaluator = JsonEqualityEvaluator()

result = evaluator.evaluate_strings(prediction='{"Exp": 2}', reference='{"Exp": 2.5}')
print(result)
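Comparing parsed JSON rather than raw strings means that formatting and key order do not affect the result. The sketch below shows this with the standard `json` module; `json_equal` is a hypothetical helper, and it only approximates what a JSON equality evaluator checks.

```python
import json

def json_equal(prediction: str, reference: str) -> bool:
    """Compare two JSON documents by parsed value, not by text."""
    return json.loads(prediction) == json.loads(reference)

different_values = json_equal('{"Exp": 2}', '{"Exp": 2.5}')
reordered_keys = json_equal('{"a": 1, "b": 2}', '{"b": 2,   "a": 1}')
print(different_values, reordered_keys)
```

Note that Python treats the parsed numbers 2 and 2.0 as equal, so this sketch does not distinguish integers from floats with the same value.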

c. JsonEditDistanceEvaluator

from langchain.evaluation import JsonEditDistanceEvaluator

evaluator = JsonEditDistanceEvaluator()

result = evaluator.evaluate_strings(
    prediction='{"name": "Ram", "Exp": 2}',
    reference='{"name": "Rama", "Exp": 2}',
)

print(result)

d. JsonSchemaEvaluator

from langchain.evaluation import JsonSchemaEvaluator

evaluator = JsonSchemaEvaluator()

result = evaluator.evaluate_strings(
    prediction='{"name": "Rama", "Exp": 4}',
    reference='{"type": "object", "properties": {"name": {"type": "string"},'
    '"Exp": {"type": "integer", "minimum": 5}}}',
)

print(result)

Using regex match, we can evaluate model predictions against a custom regular expression.

For example, the regex match evaluator below checks for the presence of a YYYY-MM-DD string.

from langchain.evaluation import RegexMatchStringEvaluator

evaluator = RegexMatchStringEvaluator()

evaluator.evaluate_strings(
    prediction="Joining date is 2021-04-26",
    reference=".*\\b\\d{4}-\\d{2}-\\d{2}\\b.*",
)
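The pattern used above can be tried directly with Python's `re` module. This sketch assumes matching from the start of the string (as `re.match` does), which is why the pattern begins with `.*`; the `regex_match` helper is hypothetical.

```python
import re

# Same pattern as above, written as a raw string.
DATE_PATTERN = r".*\b\d{4}-\d{2}-\d{2}\b.*"

def regex_match(prediction: str, pattern: str) -> dict:
    """Score 1 if `pattern` matches from the start of `prediction`."""
    return {"score": 1 if re.match(pattern, prediction) else 0}

with_date = regex_match("Joining date is 2021-04-26", DATE_PATTERN)
without_date = regex_match("Joining date is April 26", DATE_PATTERN)
print(with_date, without_date)
```

The pattern checks only the shape of the date, not its validity, so a string like 2021-99-99 would also match.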

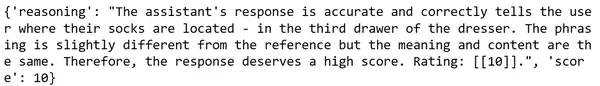

The scoring evaluator helps in assessing the model’s predictions on a specified scale (default is 1-10) based on the custom criteria/rubric. Below is an example of custom accuracy criteria that grades the prediction on a scale of 1 to 10 based on the reference specified.

from langchain.chat_models import ChatOpenAI
from langchain.evaluation import load_evaluator

accuracy_criteria = {
    "accuracy": """
Score 1: The answer is completely unrelated to the reference.
Score 3: The answer has minor relevance but does not align with the reference.
Score 5: The answer has moderate relevance but contains inaccuracies.
Score 7: The answer aligns with the reference but has minor errors or omissions.
Score 10: The answer is completely accurate and aligns perfectly with the reference."""
}

evaluator = load_evaluator(
    "labeled_score_string",
    criteria=accuracy_criteria,
    llm=ChatOpenAI(model="gpt-4"),
)

Let’s score different predictions based on the above scoring evaluator.

# Correct
eval_result = evaluator.evaluate_strings(
    prediction="You can find them in the dresser's third drawer.",
    reference="The socks are in the third drawer in the dresser",
    input="Where are my socks?",
)
print(eval_result)

# Correct but lacking information
eval_result = evaluator.evaluate_strings(
    prediction="You can find them in the dresser.",
    reference="The socks are in the third drawer in the dresser",
    input="Where are my socks?",
)
print(eval_result)

# Incorrect
eval_result = evaluator.evaluate_strings(
    prediction="You can find them in the dog's bed.",
    reference="The socks are in the third drawer in the dresser",
    input="Where are my socks?",
)
print(eval_result)
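Scores on a 1-10 scale are often easier to aggregate once they are normalized to the 0-1 range. The sketch below parses a rating out of a judge's free-text reply and normalizes it. It assumes the judge was instructed to write its rating in double square brackets, such as `[[7]]`; that format, the `parse_rating` helper, and the sample reply are assumptions for illustration.

```python
import re

def parse_rating(judge_output: str, scale: int = 10):
    """Extract a [[rating]] from judge text and normalize it by `scale`.

    Returns None when no rating is found or it falls outside 1..scale.
    """
    match = re.search(r"\[\[(\d+)\]\]", judge_output)
    if match is None:
        return None
    rating = int(match.group(1))
    if not 1 <= rating <= scale:
        return None
    return {"score": rating, "normalized": rating / scale}

# Hypothetical judge reply for the "correct but lacking information" case.
parsed = parse_rating("The answer omits the drawer. Rating: [[7]]")
print(parsed)
```

Rejecting out-of-range or missing ratings, rather than guessing, keeps a malformed judge reply from silently skewing an average.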

One of the simplest ways to compare the string output of an LLM or chain against a reference label is by using string distance measurements such as Levenshtein or postfix distance.

from langchain.evaluation import load_evaluator

evaluator = load_evaluator("string_distance")

evaluator.evaluate_strings(
    prediction="Senior Data Scientist",
    reference="Data Scientist",
)
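As a worked illustration of string distance, here is a plain-Python Levenshtein (edit) distance, along with a version normalized by the longer string's length. This is a sketch of one such metric; the metric that the evaluator above uses by default may be a different one, so the numbers here should not be expected to match its output.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions,
    or substitutions needed to turn `a` into `b`."""
    previous = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        current = [i]
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            current.append(min(
                previous[j] + 1,         # delete ch_a
                current[j - 1] + 1,      # insert ch_b
                previous[j - 1] + cost,  # substitute or keep
            ))
        previous = current
    return previous[-1]

def normalized_distance(a: str, b: str) -> float:
    """Edit distance divided by the longer length, giving a value in [0, 1]."""
    longest = max(len(a), len(b))
    return levenshtein(a, b) / longest if longest else 0.0

edits = levenshtein("Senior Data Scientist", "Data Scientist")
print(edits)  # 7: delete the seven characters of "Senior "
print(normalized_distance("Senior Data Scientist", "Data Scientist"))
```

Edit distance works on characters, so it treats "Senior Data Scientist" and "Data Scientist" as fairly close even though the job titles differ in meaning.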

Comparison evaluators in LangChain help compare the outputs of two different chains or LLMs. They are helpful for comparative analyses, such as A/B testing between two language models or comparing two different outputs from the same model.

Comparison Evaluator implementations in LangChain: pairwise string comparison, pairwise embedding distance, and custom pairwise evaluators.

This comparison helps in answering questions like –

from langchain.evaluation import load_evaluator

evaluator = load_evaluator("labeled_pairwise_string")

evaluator.evaluate_string_pairs(
    prediction="there are 5 days",
    prediction_b="7",
    input="how many days in a week?",
    reference="Seven",
)

Pairwise embedding distance is a method for gauging the similarity (or dissimilarity) between two predictions made on a common or similar input by embedding the predictions and calculating a vector distance between the two embeddings.

from langchain.evaluation import load_evaluator

evaluator = load_evaluator("pairwise_embedding_distance")

evaluator.evaluate_string_pairs(
    prediction="Rajasthan is hot in June",
    prediction_b="Rajasthan is warm in June.",
)

In this article, we discussed LLM evaluation, why it is needed, and various popular methods for evaluating LLMs. We explored the built-in and custom evaluators and the different types of criteria offered by LangChain to assess the quality and appropriateness of an LLM's output.

A. The purpose of LLM evaluation is to ensure the performance, reliability, and fairness of Large Language Models (LLMs). It is essential for identifying and addressing issues such as model hallucination, toxic or violent responses, and vulnerabilities like prompt injection and jailbreaking, which can lead to unintended and potentially harmful outcomes.

A. The major challenges in Large Language Model (LLM) evaluation include non-deterministic outputs, difficulty in obtaining a clear ground truth, limitations in applying academic benchmarks to real-world use cases, and the subjectivity and potential bias in human evaluations.

A. The popular ways to evaluate Large Language Models (LLMs) include human evaluation, utilizing end-user feedback through ratings and surveys, employing LLM-based evaluation frameworks like LangChain and RAGAS, leveraging academic benchmarks such as GLUE and SQuAD, and using standard quantitative metrics like BLEU and ROUGE scores.

A. LangChain provides a range of evaluation criteria that include (but are not limited to) conciseness, relevance, correctness, coherence, harmfulness, maliciousness, helpfulness, controversiality, misogyny, criminality, insensitivity, depth, creativity, and detail.

A. The types of comparison evaluators available in LangChain include Pairwise String Comparison, Pairwise Embedding Distance, and Custom Pairwise Evaluator. These tools facilitate comparative analysis, such as A/B testing between language models or assessing different outputs from the same model.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.
