In this edition of AV Bytes, we cover some of the most impactful developments in the AI industry over the past week. From Google’s deal with Character.ai to the release of BitNet b1.58, the AI landscape is evolving rapidly. We also explore the latest advancements in AI infrastructure, tools, and domain-specific models that are driving new capabilities and efficiencies across sectors.

Join us as we break down these major milestones and what they mean for the future of AI.

Character.ai, known for its chatbot technology, has struck a deal with Google that expands Google’s AI capabilities. Reports describe the arrangement as a licensing agreement combined with key hires rather than an outright acquisition: CEO Noam Shazeer returns to Google, and Google licenses Character.ai’s technology. The deal reflects the broader trend of tech giants absorbing AI startups to strengthen their AI portfolios.

BitNet b1.58, a 1-bit LLM variant in which every weight is ternary {-1, 0, 1}, has been introduced. Three possible values correspond to about 1.58 bits per weight (log2 of 3), hence the name. This approach could allow large models to run on devices with limited memory, such as phones.
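
To make the memory argument concrete, here is a minimal sketch of ternary quantization and the resulting weight footprint. It uses absmean scaling (divide by the mean absolute weight, round, then clip to [-1, 1]), which is our reading of the scheme described for BitNet b1.58. In BitNet this happens during training; the sketch only shows the mapping itself. The function names, the toy weights, and the 7B parameter count are hypothetical, and the size estimate ignores activations, scale factors, and packing overhead.

```python
import math

def absmean_ternary(weights, eps=1e-8):
    """Map a flat list of float weights to {-1, 0, 1} with absmean scaling."""
    # Scale by the mean absolute value so typical weights land near +/-1.
    scale = sum(abs(w) for w in weights) / len(weights) + eps
    # Round to the nearest integer, then clip into the ternary range.
    ternary = [max(-1, min(1, round(w / scale))) for w in weights]
    return ternary, scale

def weight_memory_gb(num_params, bits_per_weight):
    """Idealized weight storage in gigabytes (10^9 bytes), ignoring overhead."""
    return num_params * bits_per_weight / 8 / 1e9

toy_weights = [0.9, -0.05, 0.4, -1.3, 0.02, -0.6]  # made-up values
ternary, scale = absmean_ternary(toy_weights)
print(ternary)  # [1, 0, 1, -1, 0, -1]

params = 7e9  # hypothetical 7B-parameter model
print(weight_memory_gb(params, 16))                      # 14.0 (16-bit weights)
print(round(weight_memory_gb(params, math.log2(3)), 2))  # 1.39 (ternary weights)
```

Under these assumptions, the weights of a 7B-parameter model shrink from roughly 14 GB at 16 bits to under 1.5 GB, which is why phone-class devices come into reach.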

GitHub has introduced a new feature that allows developers to host AI models directly on the platform, providing a seamless path to experiment with model inference code using Codespaces.

Google’s new Gemma 2 language models and Black Forest Labs’ FLUX.1 text-to-image models are raising the bar in their respective areas, with notable gains in both efficiency and performance.

PyTorch has released torchchat, a versatile solution for running large language models (LLMs) locally on various devices. Supporting models like Llama 3.1, torchchat offers features for evaluation, quantization, and optimized deployment across different platforms.

LangChain introduced LangGraph Studio, an agent IDE designed for developing LLM applications. It provides visualization, interaction, and debugging tools for complex agentic applications, streamlining the development process.

Compute Express Link (CXL) technology is drawing attention in AI for expanding memory bandwidth and capacity, addressing one of the most critical constraints in AI development. This matters for building more powerful and efficient AI models.

Distributed Shampoo has outperformed Nesterov Adam in deep learning optimization, marking a significant advancement in non-diagonal preconditioning.

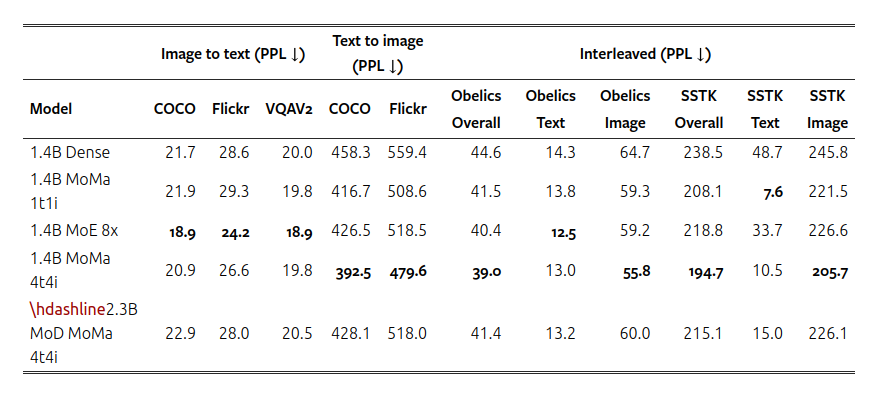

Meta introduced MoMa, a new sparse early-fusion architecture for mixed-modal language modeling that significantly improves pre-training efficiency. MoMa achieves approximately 3x efficiency gains in text training and 5x in image training.

John Snow Labs has launched a no-code tool for responsible AI testing in healthcare, enabling non-technical experts to evaluate custom language models. This tool is crucial for ensuring the safe and effective deployment of AI in healthcare settings.

Multimodal AI, which integrates various data types into unified AI solutions, is gaining momentum. This approach is particularly beneficial in fields like healthcare and law, where diverse data types are common.

The rise of domain-specific AI models offers tailored solutions for industries like healthcare and law. These models are designed to meet the unique needs of specific domains, providing more accurate and relevant insights.

Quantum computing is poised to revolutionize AI by providing faster computation and more powerful algorithms. This technology opens new research and application avenues, potentially transforming fields that require complex computations.

Apple has launched “Apple Intelligence,” a suite of AI features aimed at enhancing services like Siri and automating various tasks. This suite includes advanced machine learning models and natural language processing capabilities, positioning Apple as a significant player in the AI space.

The National Telecommunications and Information Administration (NTIA) issued a report advocating for the openness of AI models while recommending risk monitoring. This report, which directly influences White House policy, could shape future AI regulations in the United States.

A debate emerged around the effectiveness of watermarking in solving trust issues in AI. Some argued that watermarking only works in institutional settings and cannot prevent misuse entirely. The discussion highlighted the need for better cultural norms and trust mechanisms to address the spread of deepfakes and misrepresented content.

As AI continues to advance at an unprecedented pace, the developments highlighted in this edition of AV Bytes underscore the transformative impact these technologies are having across industries. From Google’s strategic moves to innovations in AI infrastructure and domain-specific applications, the progress made in just a week is a testament to the field’s dynamism. As we move forward, these advancements will not only reshape industries but also redefine the possibilities of what AI can achieve, paving the way for a future where technology and human ingenuity converge in new and exciting ways.

Stay tuned for more updates and insights in the world of artificial intelligence in the next edition of our AI News Blog!
